Last Updated on June 26, 2025

The movie 2001: A Space Odyssey envisioned a future where voice is the main way humans interact with machines. In the film, AI computers like HAL 9000 communicate primarily through speech. For the past two years, I have been trying various AI voice tools. Today, I want to share a tool that I find exceptional: ElevenLabs. This tool is a leader in the AI voice field. It has super-realistic and expressive AI voice generation capabilities.

In 2022, Mati Staniszewski and his co-founder Piotr Dąbkowski founded ElevenLabs. Their inspiration came from the poor quality of the single-narrator voice-overs used to dub foreign films in Poland. They believed that voice is the best interface for interacting with technology.

Core Functions: Building a World of Voice

ElevenLabs offers more focused and powerful voice functions compared to similar products.

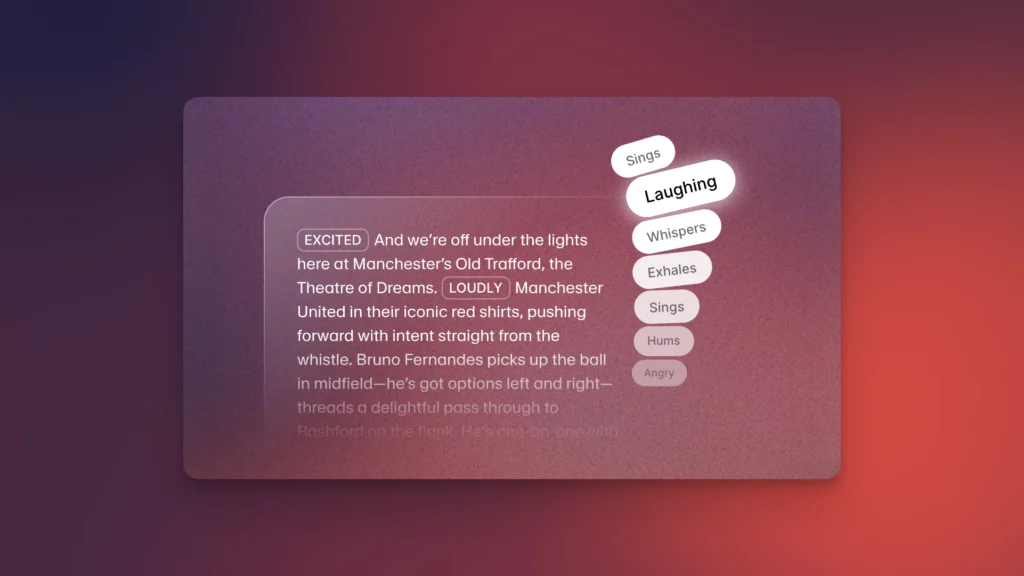

- Text-to-Speech (TTS): This is the most basic and common function. It converts written text into high-quality, natural-sounding speech. But ElevenLabs’ TTS technology goes further. It adjusts the voice’s delivery based on emotional cues, context, and the broader text. The latest V3 model offers unprecedented control over emotional depth, speed, and intonation. The AI voice can even mimic human whispers, laughter, and sneezes. I have used it to generate voices with various emotions, and the results are stunningly realistic.

- Voice Cloning: This is ElevenLabs’ ace in the hole. It can create a synthetic copy of a human voice, accurately replicating nuances of tone, accent, and intonation. It has two modes:

- Instant Voice Cloning: Quickly clones a voice from a short audio sample (usually 10 seconds to 1 minute). This is convenient for users who want to experiment quickly.

- Professional Voice Cloning: Requires at least 30 minutes of high-quality audio data for training. It produces a highly accurate voice clone that is difficult to distinguish from the original. I tried cloning my own voice with a few recordings. The result was surprisingly good; even I couldn’t tell which was the AI-generated one.

- Speech-to-Speech: This function allows users to transform their voice into another character’s voice while preserving the original emotion and expression. For example, you can change a male voice to a female voice or maintain a specific rhythm and intonation. This is very useful for scenarios where you need to change your voice but want to keep your original expression.

- AI Dubbing and Video Translation: This is a feature I personally love. It can translate video content into multiple languages while preserving the original speaker’s voice characteristics, emotions, timing, and intonation. ElevenLabs can automatically detect speakers and dub the audio without needing subtitles. This greatly expands the global audience for content. For creators of international content, this is a magical tool.

- Sound Effect Generation: Besides voice, ElevenLabs allows users to generate various sound effects from text descriptions. For example, you can generate a cat’s meow, a truck’s reversing sound, or even a laser beam sound. The latest update also supports generating short instrumental pieces and immersive soundscapes. This is probably the first AI to support sound effect generation. This feature is very useful for game developers and video creators, who can quickly generate needed sound effects instead of searching sound libraries or recording their own.

- Other Practical Functions:

- Studio (formerly Projects): A long-form audio editor designed for creating audiobooks and podcasts.

- Voice Design: Users can design new synthetic voices from scratch by describing attributes like age, gender, accent, and tone.

- Voice Library: A constantly expanding collection of high-quality AI voices. Users can filter by gender, age, accent, and other criteria to find the perfect voice for their project.

- API Access: Provides developers with a reliable and easy-to-use API to seamlessly integrate ElevenLabs’ AI voice functions into various applications.

- Voice Isolator: A utility that can extract clear speech from any audio, effectively removing background noise and simplifying the post-production process.
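To give a feel for what the API integration looks like, here is a minimal sketch in Python using only the standard library. It assumes the public REST endpoint `POST /v1/text-to-speech/{voice_id}` with an `xi-api-key` header and a JSON body containing `text` and `model_id`. The voice ID, the default model name, and the output file name are placeholders of mine, so check the current API reference before relying on any of them.

```python
import json
import urllib.request

# Assumed base URL of the public REST API; verify against the official docs.
API_BASE = "https://api.elevenlabs.io/v1"


def build_tts_request(api_key: str, voice_id: str, text: str,
                      model_id: str = "eleven_multilingual_v2") -> urllib.request.Request:
    """Build (but do not send) a text-to-speech HTTP request."""
    payload = {"text": text, "model_id": model_id}
    return urllib.request.Request(
        url=f"{API_BASE}/text-to-speech/{voice_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def synthesize(api_key: str, voice_id: str, text: str,
               out_path: str = "speech.mp3") -> str:
    """Send the request and save the returned audio bytes to a file."""
    request = build_tts_request(api_key, voice_id, text)
    with urllib.request.urlopen(request) as response:
        audio = response.read()
    with open(out_path, "wb") as f:
        f.write(audio)
    return out_path
```

Calling `synthesize("YOUR_API_KEY", "YOUR_VOICE_ID", "Hello from ElevenLabs.")` would write the audio to `speech.mp3`. Splitting request building from sending keeps the part that needs no network access easy to inspect and test.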

Model Features: The Pursuit of “Human-likeness”

ElevenLabs’ core strength is the realism and expressiveness of its voices. I have used many voice synthesis tools, and ElevenLabs sounds the most “human-like” of them.

- Natural and Emotionally Rich: ElevenLabs’ AI models can imitate human intonation, pauses, and even breathing patterns. It can adapt to emotional cues in the text, understand the relationships between words, and adjust the intonation accordingly. This makes the generated speech sound very natural, not mechanical and stiff.

- Context-Awareness: The models do not rely on hard-coded rules for delivery. Instead, they dynamically predict thousands of voice characteristics and adjust the delivery based on the context. This flexibility makes the generated speech better suited to real-world scenarios.

- Multi-language Support: While some older models (like Eleven Multilingual V1) supported multiple languages with limited accuracy, the Eleven Multilingual V2 model is a more advanced version. It supports 28 languages, including Japanese, Chinese, Korean, and several European languages, with higher stability, diversity, and accent accuracy. The latest Eleven V3 Alpha model increases the number of supported languages to over 70 and introduces multi-speaker dialogue and fine-grained audio tag control. This is good news for creators of global content.

- Safety and Transparency (AI Audio Detector): ElevenLabs emphasizes content traceability and transparency. All content generated on the platform can be traced back to the account that generated it. The company has also launched a publicly available classifier that detects whether an audio file was generated by ElevenLabs. In the future, it envisions a system that defaults to detecting “human content” and uses “certified AI agents” to ensure the authenticity of AI content. This approach is commendable given the growing attention to AI ethics and safety.

Competitive Advantages: A Leader in the AI Voice Field

I have compared several mainstream AI voice tools on the market, and ElevenLabs does have its unique advantages.

- Focus on Voice AI: Unlike other general-purpose AI tools, ElevenLabs focuses on the voice field. It conducts both basic research and product development. This focus allows it to achieve excellence in voice quality and expressiveness.

- User-Friendly Interface: Its interface is simple, intuitive, and easy to navigate. It is friendly to both beginners and experienced users. I was able to get started quickly the first time I used it without a complex learning process.

- Comparison with Competitors:

- vs. Murf.ai: ElevenLabs offers more high-quality voice options (600+ vs. 120+) and more language support (30+ vs. 20+), but Murf.ai provides Google Slides and Canva plugins.

- vs. Synthesia: ElevenLabs focuses on voice, while Synthesia focuses on AI video generation and avatars, offering over 230 AI avatars and support for over 140 languages. The two can be used together.

- vs. Lovo.ai: Lovo.ai has more voice and language options (500+ voices, 100+ languages) and offers a video editor and AI writer. ElevenLabs is more cost-effective in specific scenarios like game development.

- vs. Speechify: Speechify focuses on text-to-speech for faster content consumption and offers an AI avatar function, with a greater emphasis on accessibility.

- vs. Descript: ElevenLabs focuses on voice, while Descript is a comprehensive video and audio editing platform that includes a voice cloning function, but its learning curve may be steeper.

- vs. HeyGen: HeyGen focuses on AI avatars and personalized video content, while ElevenLabs excels in top-tier voice cloning and realistic voice synthesis.

In summary, if your main focus is on voice quality and realism, ElevenLabs is the best choice. If you need a more comprehensive content creation solution, you might need to consider other tools or combine ElevenLabs with other tools.

Pricing: Flexible Subscription Options

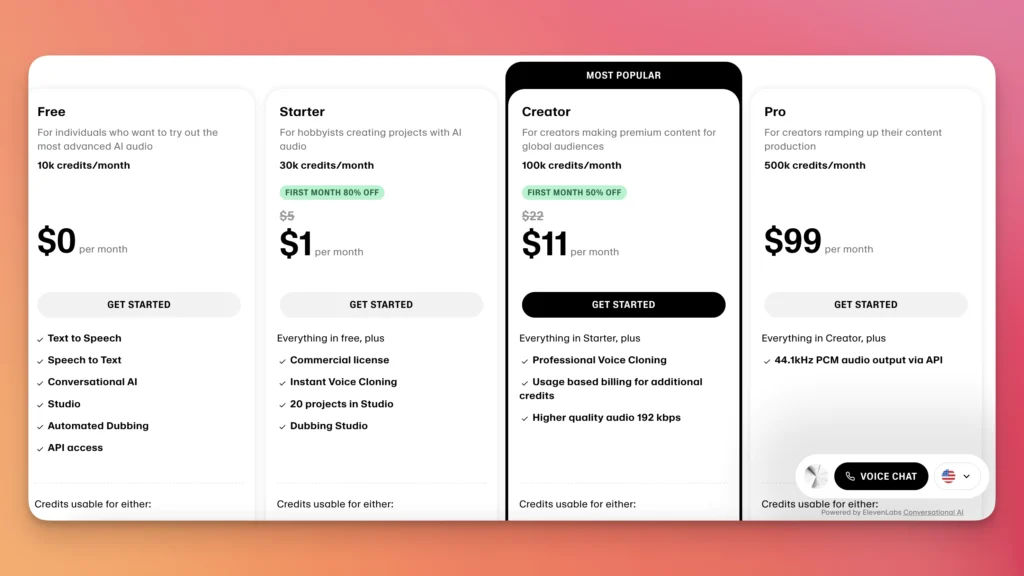

ElevenLabs offers a variety of subscription plans to meet the needs of different users, from free trial users to large enterprises.

- Free Plan: 10,000 characters per month (about 10 minutes of text-to-speech). You can create up to 3 custom voices, but the plan includes neither a commercial license nor voice cloning. It is suitable for initial trials or personal, non-commercial use. I don’t recommend that beginners subscribe right away: I started with the free plan to get a feel for the features, and it was enough to understand the functionality.

- Starter Plan: $5 per month ($1 for the first month). 30,000 characters per month limit (about 30 minutes). Includes Instant Voice Cloning and a commercial license. Create up to 10 custom voices. This plan is suitable for small creators or users who need it occasionally. The price is very affordable.

- Creator Plan: $22 per month (50% off for the first month, $11). 100,000 characters per month limit (about 2 hours of audio). Includes Professional Voice Cloning, higher quality audio output, and priority customer service. Create up to 30 custom voices. This plan is suitable for active content creators. I currently use this plan, and it’s very cost-effective.

- Professional Plan: $99 per month. 500,000 characters per month limit. Includes an analytics dashboard and higher quality audio output via the API. Create up to 160 custom voices. This plan is suitable for professional production teams or users who need a large amount of audio output.

- More Advanced Plans: There are also more advanced options like the Scale plan ($330 per month, 2,000,000 characters), the Business plan ($1,320 per month, 11,000,000 characters), and the Enterprise plan (custom pricing) for businesses of different sizes.

It is worth mentioning that annual plans usually offer a discount, equivalent to two months of free service. For paying users, unused character quotas can be rolled over to the next two months, which is very user-friendly.
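To compare the paid plans on a like-for-like basis, the arithmetic can be sketched in a few lines of Python. The prices and quotas are the ones listed above. The 1,000 characters per minute rate is my own rough assumption, derived from "10,000 characters is about 10 minutes", and the annual figure assumes the "two months free" discount applies exactly.

```python
# (monthly price in USD, monthly character quota), as listed in this review.
PLANS = {
    "Starter": (5, 30_000),
    "Creator": (22, 100_000),
    "Professional": (99, 500_000),
    "Scale": (330, 2_000_000),
    "Business": (1_320, 11_000_000),
}

# Rough assumption: 10,000 characters is about 10 minutes of audio.
CHARS_PER_MINUTE = 1_000


def cost_per_1k_chars(price: float, chars: int) -> float:
    """Price in USD for every 1,000 characters of quota."""
    return price / chars * 1_000


def approx_minutes(chars: int) -> float:
    """Approximate audio length for a character count."""
    return chars / CHARS_PER_MINUTE


def annual_monthly_equivalent(price: float) -> float:
    """Effective monthly price if an annual plan gives two months free."""
    return price * 10 / 12


def cheapest_plan(chars_needed: int):
    """Lowest-priced plan whose quota covers the need, or None if none does."""
    fitting = [(price, name) for name, (price, chars) in PLANS.items()
               if chars >= chars_needed]
    return min(fitting)[1] if fitting else None


if __name__ == "__main__":
    for name, (price, chars) in PLANS.items():
        print(f"{name}: ${cost_per_1k_chars(price, chars):.3f} per 1k chars, "
              f"about {approx_minutes(chars):.0f} min, "
              f"${annual_monthly_equivalent(price):.2f}/month if billed annually")
```

For example, `cheapest_plan(80_000)` picks the Creator plan, since the Starter quota of 30,000 characters is too small. Rollover of unused characters is not modeled here.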

Best Practices and Tips: Mastering the Art of AI Voice

To fully leverage ElevenLabs’ potential, it’s crucial to understand its underlying mechanisms and master some practical tips. Here are some of my experiences.

- How to Write Effective Prompts:

- Pause Control: Use the `<break time="x.xs"/>` syntax to insert precise pauses, for example, `<break time="1.5s"/>` for a 1.5-second pause. Ellipses (…) or dashes (–) can also create natural pauses, although they may be less reliable than precise tags. In Studio, you can manually add pauses from 0.1 to 3 seconds. This is very useful for controlling the rhythm of the speech, especially when producing audiobooks or podcasts.

- Emotional Expression: Write the text like a book, adding dialogue tags (e.g., “she shouted excitedly”) to guide the AI to express specific emotions. Using capital letters can add emphasis to words (e.g., “FINALLY”). The V3 model introduces more fine-grained audio tags, written in square brackets, such as `[laughs]`, `[whispers]`, and `[sarcastic]`, to achieve deeper emotional control. I’ve tried these tags, and the results are indeed great, allowing the AI voice to express rich emotions.

- Matching Tags and Voices: Ensure that the emotional and delivery tags match the character of the chosen voice. For example, a calm voice may not be suitable for an angry tag, as it could lead to inconsistent results.

- Text Structure and Prompt Length: Natural speech patterns, proper punctuation, and clear emotional context have a strong influence on the V3 output. The V3 model works better with longer prompts; prompts under 250 characters may produce inconsistent results.
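The pause and prompt-length tips can be turned into a small helper. This is a minimal sketch: the function names are my own, and the only facts it relies on are the `<break time="x.xs"/>` syntax and the 250-character guideline for V3 mentioned in the tips.

```python
# Guideline from the tips: V3 prompts under 250 characters may be inconsistent.
MIN_V3_PROMPT_CHARS = 250


def pause(seconds: float) -> str:
    """Return a break tag such as <break time="1.5s"/>."""
    if seconds <= 0:
        raise ValueError("pause length must be positive")
    return f'<break time="{seconds:.1f}s"/>'


def join_with_pauses(sentences, seconds: float = 1.0) -> str:
    """Join sentences with an explicit pause tag between each pair."""
    tag = pause(seconds)
    return f" {tag} ".join(s.strip() for s in sentences)


def is_long_enough_for_v3(text: str) -> bool:
    """Check a prompt against the 250-character guideline."""
    return len(text) >= MIN_V3_PROMPT_CHARS


if __name__ == "__main__":
    script = join_with_pauses(
        ["Chapter one.", "It was a quiet morning.", "Then the phone rang."],
        seconds=1.5,
    )
    print(script)
    print("Long enough for V3:", is_long_enough_for_v3(script))
```

Generating the tags in code keeps the pause lengths consistent across a long script, which matters most for audiobooks and podcasts.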

- How to Adjust Voice Settings:

- Stability: This setting controls the consistency of the generated speech across different generations. Lower values (e.g., 40%) make the speech more expressive, while higher values make it more stable but potentially monotonous. For long texts, I recommend maintaining higher stability. For short phrases or experimental content, you can try lower values. V3 offers “Creative,” “Natural,” and “Robust” modes. I recommend using “Creative” or “Natural” mode when adding audio tags.

- Clarity + Similarity Enhancement: This setting determines how accurately the AI replicates the original voice. If the original audio quality is poor, a high setting may replicate background noise. My experience is to turn it up when there is little background noise, and lower it otherwise. Generally, keeping it at the default value of 75% is fine.

- Style Exaggeration and Speaker Boost: The Style Exaggeration setting defaults to 0. Increasing this value will exaggerate the original speaker’s style (accent, intonation, etc.), but may lead to unstable or “quirky” results. It should be used with caution or adjusted for experimental purposes. The Speaker Boost feature enhances similarity to the original speaker, but the effect is usually very subtle. It’s usually fine to keep it enabled by default.
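If you set these values through the API rather than the sliders, they can be captured in a small settings object. The sketch below assumes the field names `stability`, `similarity_boost`, `style`, and `use_speaker_boost` and a 0 to 1 scale, so that 75% becomes 0.75. The 0.5 default for stability and the two presets are my own illustrative choices, not official values.

```python
from dataclasses import asdict, dataclass


@dataclass
class VoiceSettings:
    """Voice settings on a 0.0 to 1.0 scale (field names are assumptions)."""
    stability: float = 0.5          # illustrative default, not an official value
    similarity_boost: float = 0.75  # the 75% default mentioned above
    style: float = 0.0              # Style Exaggeration defaults to 0
    use_speaker_boost: bool = True  # usually fine to leave enabled

    def __post_init__(self):
        # Reject slider values outside the 0-1 range early.
        for name in ("stability", "similarity_boost", "style"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be between 0 and 1, got {value}")

    def to_payload(self) -> dict:
        """Return a plain dict, ready to be serialized as JSON."""
        return asdict(self)


# Hypothetical presets following the advice above.
LONG_FORM = VoiceSettings(stability=0.75)   # steadier delivery for long texts
EXPRESSIVE = VoiceSettings(stability=0.40)  # more variation for short phrases

if __name__ == "__main__":
    print(LONG_FORM.to_payload())
    print(EXPRESSIVE.to_payload())
```

Keeping presets like these in one place makes it easier to reproduce a delivery style across regenerations.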

- How to Clone Your Own Voice: This is one of the features I am most interested in. Here are some practical tips:

- High-Quality Recording: Use a high-quality microphone and ensure the recording environment has no background noise or echo.

- Sample Length: It is recommended to record more than 1 minute of audio; 1-2 minutes of clear audio without reverb or artifacts is the sweet spot.

- Consistency: Maintain consistency in your speech delivery and context during recording (e.g., if it’s for an audiobook, record in an audiobook style).

- Permissions: If the voice is not your own, be sure to get explicit permission from the person being cloned.

It is important to note that ElevenLabs recently implemented a verification system for Instant Voice Cloning. This means that even when cloning your own voice, you may need to verify your identity by recording a piece of text with a microphone before you can use the cloned voice.
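Before uploading a sample, it can help to check its length against the 1-2 minute sweet spot from the tips above. The following sketch uses Python's standard `wave` module and therefore assumes an uncompressed WAV file. The labels and thresholds are my own reading of the tips, and the script says nothing about noise, echo, or reverb, which you still have to judge by ear.

```python
import io
import wave


def wav_duration_seconds(source) -> float:
    """Duration of a WAV file, given a path or a binary file-like object."""
    with wave.open(source, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def classify_sample(seconds: float) -> str:
    """Compare a sample length with the 1-2 minute sweet spot."""
    if seconds < 60:
        return "too short"
    if seconds <= 120:
        return "sweet spot"
    return "longer than needed"


def make_silent_wav(seconds: int, rate: int = 8000) -> io.BytesIO:
    """Build an in-memory mono 16-bit WAV of silence (for demonstration only)."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * rate * seconds)
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    duration = wav_duration_seconds(make_silent_wav(90))
    print(f"{duration:.0f} s: {classify_sample(duration)}")
```

In practice you would pass the path of your own recording to `wav_duration_seconds` instead of the generated silence.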

Disadvantages: Still Room for Improvement

As a regular user, I have also found some notable limitations, although they are not a major issue for me:

- Lack of Real-Time Customer Support: Support is currently only available via email, an AI chatbot, and online resources (like FAQs and tutorials). For urgent issues, the lack of real-time help can affect workflows. I once had a problem and had to wait two days for a response, which could be an issue for time-sensitive projects.

- Voice Consistency and Pronunciation Challenges:

- Inconsistent Intonation: Sometimes the voice quality can vary, requiring manual editing or multiple regenerations to achieve the desired result.

- Pronunciation Issues: Occasional pronunciation errors occur when dealing with industry jargon, proper nouns, or non-English words. Chinese pronunciation, in particular, while already quite good, can sometimes be a bit strange.

- Relatively Limited Language and Voice Options: Although ElevenLabs is constantly expanding its language library, its selection is relatively small compared to some competitors that offer hundreds of languages and voices. However, the existing options are sufficient for most users.

- Cost Considerations: While a free plan and entry-level paid options are available, the cost can quickly add up for users who need a large number of characters or advanced features. If your project requires a large amount of voice output, budget may be a consideration.

- Lack of Video Editor and AI Writing Tools: Unlike some “all-in-one” platforms, ElevenLabs focuses on voice and does not provide built-in video editing or AI writing features. This means you may need to use other tools to complete your entire content creation workflow.

- New Voice Cloning Verification Policy: The recently introduced verification requirement makes the Instant Voice Cloning feature less “plug-and-play” than before. It requires users to perform additional verification, and the platform has a stricter stance on cloning non-self voices, which has caused some user dissatisfaction and workflow interruptions. (Imagine you are cloning a client’s voice; do you have to ask the client to verify it every time?)

Who It Is For: Use Case Examples

- Video Creators and YouTubers: Use it for video narration, creating engaging short video content, and even “faceless” or “voiceless” video creation. I have a few friends who are video creators, and they use ElevenLabs to generate narration. The effect is very good, and it saves them time and effort compared to recording their own voices.

- Game Developers: Use it to voice game characters, leveraging its rich AI voice library and emotional control to bring a more immersive experience to players. This is a very cost-effective solution, especially for independent game developers.

- Developers: Integrate AI voice into chatbots, virtual assistants, language translation applications, or other custom solutions via the API. ElevenLabs’ API is very easy to use, and the documentation is comprehensive.

- Businesses and Marketers: Create high-quality advertisements, presentations, and training materials, and localize content with AI dubbing to expand into global markets. This is very valuable for businesses that want to quickly enter international markets.

- Podcasters and Audiobook Producers: Generate long-form narration, assign different voices to different characters, and improve production efficiency and content appeal. I know some authors have started using ElevenLabs to produce audiobook versions of their own works.

- Educators: Convert learning materials into an audible format, provide accessible content for learners from different language backgrounds, and make courses more engaging and interesting. This is very useful for online education platforms and remote learning.

- Accessibility and Personalization: Recreate voices for people who have lost them due to diseases (like ALS or cancer), or help visually impaired people access online content. ElevenLabs has also partnered with Perplexity to develop a voice assistant that allows users to get information through voice interaction.

I have integrated ElevenLabs into my content creation workflow. It not only improves my work efficiency but also adds a new dimension to my content. I hope this review helps you decide if ElevenLabs is right for your needs.